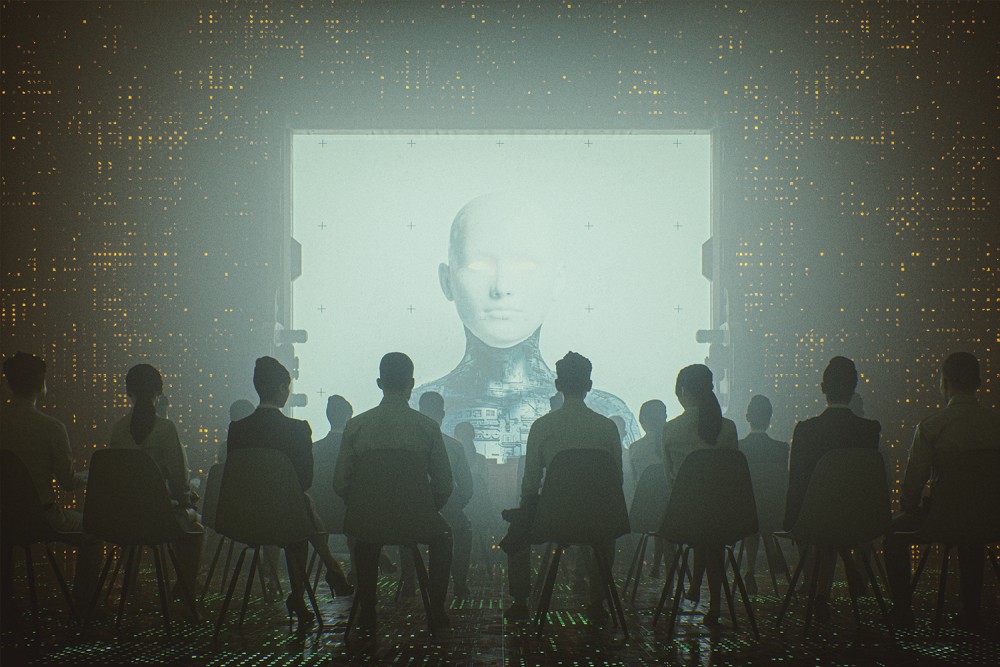

Artificial intelligence needs theology

What exactly is an “evil robot”? Who gets to define it?

(Image by gremlin / E+ / Getty)

“OpenAI Releases Plan to Prevent a Robot Apocalypse” read the headline of a December 19 Daily Beast article. The plan is to create a “preparedness team” to supplement the artificial intelligence company’s current safety efforts, which include mitigating the technology’s adoption of human biases such as racism and preventing machine goals from overriding human goals. The new team, led by MIT researcher Aleksander Madry, will monitor for potential catastrophic events involving AI, such as creating and deploying biological weapons, sabotaging an economic sector, or hacking into military systems to start a nuclear war.

The primary work of the preparedness team, Madry told the Washington Post, will be to prevent exploitation of the technology by people who approach it asking, “‘How can I mess with this set of rules? How can I be most ingenious in my evilness?’”

In this issue of The Century, theologian Katherine Schmidt writes about the role of the humanities in a world where AI is becoming increasingly powerful, generative, and autonomous. As corporations and policymakers grapple with the blurring lines between human agency and computer-generated agency, Schmidt argues that “ethical theory and ethics education” are vital. Further, she points out that theologians and philosophers are “uniquely qualified” to weigh in on the conversation, since they are skilled at addressing basic questions about truth, meaning, and agency.